Kubernetes and Docker are two terms you have almost certainly encountered, but if you are new to containerization you may not fully understand them yet. Both are container technologies that have distinct roles but also share some common ground. This article examines the similarities and differences between Kubernetes and Docker and highlights the benefits of each.

An Overview of Kubernetes

Kubernetes, commonly abbreviated K8s, is a free, open-source container orchestration platform. It automates much of the manual work involved in deploying, managing, and scaling containerized applications, and it coordinates container runtimes across a cluster of networked machines. Kubernetes does not require Docker, although it can run containers built with Docker.

Google needed a new approach to manage the billions of containers it ran each week, and Kubernetes grew out of that work. Google released Kubernetes as an open-source project in 2014. Today it is the dominant orchestration tool and the industry standard for deploying containers and distributed applications. According to Google’s announcement, its primary design goal is to make complex distributed systems easier to deploy and manage while still benefiting from the improved utilization that containers provide.

How does Kubernetes work?

Architects design a multi-container application by defining how each component works and how the components interact. They decide how many instances of each component should run and plan for problems that might arise, such as many users logging in at the same time. They store their containerized application components in a local or remote container registry and capture the design in one or more text files that make up a configuration. To bring the application up, they “apply” the configuration to the Kubernetes cluster.
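The configuration described above is usually a short declarative manifest. As a rough sketch, the following Python builds one as a dictionary and prints it as JSON, a format that `kubectl apply -f` accepts alongside YAML. The helper `build_deployment`, the application name `web`, the image `registry.example.com/web:1.0`, and the replica count are hypothetical placeholders chosen for illustration, not values taken from this article.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a minimal Deployment manifest as a plain dictionary."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # How many copies of the container should be kept running.
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical application name and image, for illustration only.
    manifest = build_deployment("web", "registry.example.com/web:1.0", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it would ask the cluster to keep three copies of that container running; the file states what should exist, not the steps to get there.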

From that point on, Kubernetes is responsible for analyzing, implementing, and maintaining this configuration. It:

- Checks the configuration’s requirements against those of all other application configurations running on the system.

- Finds resources suitable for running the new containers. Some containers, for example, need graphics processing units (GPUs), which are not present on every host.

- Pulls the container images from the registry, starts the containers, and connects them to system resources such as persistent storage.

- Monitors everything and, when the actual state deviates from the desired state, tries to correct it. If a container crashes, for example, Kubernetes tries to restart it.

- Looks for resources elsewhere to run the containers a node was hosting if that underlying server fails. If application traffic rises unexpectedly, Kubernetes can also add containers, within the rules and limits set out in the configuration.
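The monitor-and-correct behavior in the list above is often described as a reconciliation loop: compare the desired state with the actual state and act on the difference. The sketch below is a toy model of that idea in Python, not Kubernetes code. The `Cluster` class and `reconcile` function are hypothetical names, and starting or stopping a container is reduced to editing a list.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster: tracks running container names per app."""
    running: dict[str, list[str]] = field(default_factory=dict)
    next_id: int = 0

    def start(self, app: str) -> None:
        self.next_id += 1
        self.running.setdefault(app, []).append(f"{app}-{self.next_id}")

    def stop(self, app: str) -> None:
        self.running[app].pop()


def reconcile(cluster: Cluster, desired: dict[str, int]) -> None:
    """Start or stop containers until actual counts match the desired counts."""
    for app, want in desired.items():
        have = len(cluster.running.get(app, []))
        for _ in range(want - have):   # too few: start replacements
            cluster.start(app)
        for _ in range(have - want):   # too many: stop the surplus
            cluster.stop(app)


if __name__ == "__main__":
    cluster = Cluster()
    desired = {"web": 3}
    reconcile(cluster, desired)        # initial rollout
    cluster.running["web"].pop(0)      # simulate a crashed container
    reconcile(cluster, desired)        # the loop restores the third replica
    print(cluster.running)
```

The same function handles the initial rollout, crash recovery, and scaling down, because it only ever looks at the gap between what is wanted and what exists.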

What can you do with Kubernetes?

The main benefit of using Kubernetes in your environment, especially if you are optimizing application development for the cloud, is that it gives you a platform for scheduling and running containers on clusters of physical or virtual machines.

More broadly, it makes it much easier to deploy and rely on container-based infrastructure in production environments. And because Kubernetes is all about automating operational tasks, you can do for your containers many of the things that other application platforms or management systems let you do.

Developers can also build cloud-native applications with Kubernetes as the runtime platform by using Kubernetes patterns. Patterns are the tools a Kubernetes developer uses to build container-based applications and services.

With Kubernetes you can:

- Orchestrate containers across multiple hosts.

- Make better use of hardware to get the most from the resources needed to run your enterprise applications.

- Control and automate application deployments and updates.

- Mount and add storage to run stateful applications.

- Scale containerized applications and their resources on the fly.

- Manage services declaratively, so that deployed applications always run the way you intended.

- Health-check and self-heal your applications with auto-placement, auto-restart, auto-replication, and auto-scaling.

However, Kubernetes relies on other projects to deliver these orchestrated services in full. Adding other open-source projects lets you realize its full capabilities.

These complementary pieces include, among others:

- Registry, through projects such as Docker Registry.

- Networking, through projects such as OpenvSwitch and intelligent edge routing.

- Telemetry, through projects such as Kibana, Hawkular, and Elastic.

- Security, through projects such as LDAP, SELinux, RBAC, and OAuth with multitenancy layers.

- Automation, with Ansible playbooks for installation and cluster lifecycle management.

- Services, through a rich catalog of popular application patterns.

Where can Kubernetes run?

Kubernetes runs on many Linux operating systems, and worker nodes can also run on Windows Server. A single Kubernetes cluster can span hundreds or even thousands of bare-metal or virtual machines in a data center, private cloud, or public cloud. Kubernetes can also run on developer desktops, edge servers, microservers such as Raspberry Pis, and very small mobile and Internet of Things devices and appliances.

With suitable product and architectural choices and adequate planning, Kubernetes can provide a functionally consistent platform across all of these infrastructures. Configurations and applications developed and tested on a desktop Kubernetes can therefore move smoothly to larger deployments in production, at the edge, or on Internet of Things devices. As a result, businesses and organizations can build “hybrid” and “multi-cloud” environments that span several platforms, letting them address capacity problems quickly and cost-effectively without locking themselves into a single cloud provider.

What is a Kubernetes cluster?

The K8s architecture is relatively simple. You never interact directly with the nodes that host your application; you work through the control plane instead. The control plane schedules and replicates groups of containers called pods, and it exposes an application programming interface (API). The kubectl command-line interface talks to that API, either to share the desired application state or to gather detailed information on the infrastructure’s current state.

Let’s examine each of the various components

Each node hosts part of your distributed application. It does so using Docker or a similar container technology, such as Rocket (rkt) from CoreOS. Nodes also run two pieces of software, kube-proxy and kubelet. The former provides access to the running application, while the latter receives commands from the K8s control plane. Nodes can also run flannel, an etcd-backed network fabric for containers.

The control plane runs the Kubernetes API server (kube-apiserver), the scheduler (kube-scheduler), and the controller manager (kube-controller-manager). It also runs etcd, a highly available key-value store that uses the Raft consensus algorithm and handles shared configuration and service discovery.

What is Kubernetes-native infrastructure?

Today, most on-premises Kubernetes installations run on top of existing virtual infrastructure, and a growing number run on bare-metal servers. This is a natural step in the evolution of the data center. Kubernetes serves as the deployment and lifecycle management tool for containerized applications, while separate tools manage the infrastructure resources.

But what if the data center were designed from the ground up to support containers, including at the infrastructure layer?

In that case, Kubernetes itself would provision and manage bare-metal servers and software-defined storage, giving the infrastructure the same self-installing, self-scaling, and self-healing qualities that containers have. This approach is called Kubernetes-native infrastructure.

The benefits of utilizing Kubernetes

Kubernetes is often called the “Linux of the cloud,” so it should come as no surprise that it is the most widely used container orchestration platform. Several factors explain this, including the following:

Automated operations

Kubernetes comes with a powerful API and a command-line tool, kubectl. Together they handle much of the heavy lifting of container management by letting you automate your operations. The controller pattern in Kubernetes ensures that applications and containers run exactly as specified.

Infrastructure abstraction

Once you give Kubernetes access to your resources, it manages them on your behalf. Developers are then free to concentrate on writing application code rather than on the underlying compute, networking, or storage infrastructure.

Service health monitoring

Kubernetes continuously compares the current state of the running environment with the desired state. It performs automated health checks on services and restarts containers that have failed or stalled. Kubernetes makes a service available only when its instances are running and ready.
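The two rules described here, restart what has failed and route traffic only to what is ready, can be pictured with a small Python sketch. It is a simplified model under stated assumptions: the `Instance` class and the `routable` and `needs_restart` helpers are hypothetical names, and the `alive` and `ready` flags stand in for the results of the liveness and readiness checks that Kubernetes runs against containers.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    name: str
    alive: bool   # result of a liveness-style check
    ready: bool   # result of a readiness-style check


def routable(instances: list[Instance]) -> list[str]:
    """Only instances that are both alive and ready should receive traffic."""
    return [i.name for i in instances if i.alive and i.ready]


def needs_restart(instances: list[Instance]) -> list[str]:
    """Instances that fail the liveness check are candidates for a restart."""
    return [i.name for i in instances if not i.alive]


if __name__ == "__main__":
    fleet = [
        Instance("web-1", alive=True, ready=True),
        Instance("web-2", alive=True, ready=False),   # still starting up
        Instance("web-3", alive=False, ready=False),  # crashed or hung
    ]
    print("send traffic to:", routable(fleet))
    print("restart:", needs_restart(fleet))
```

Keeping the two checks separate matters: an instance that is still warming up should be left alone rather than restarted, but it should not receive requests yet.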

Docker Explained

What is Docker?

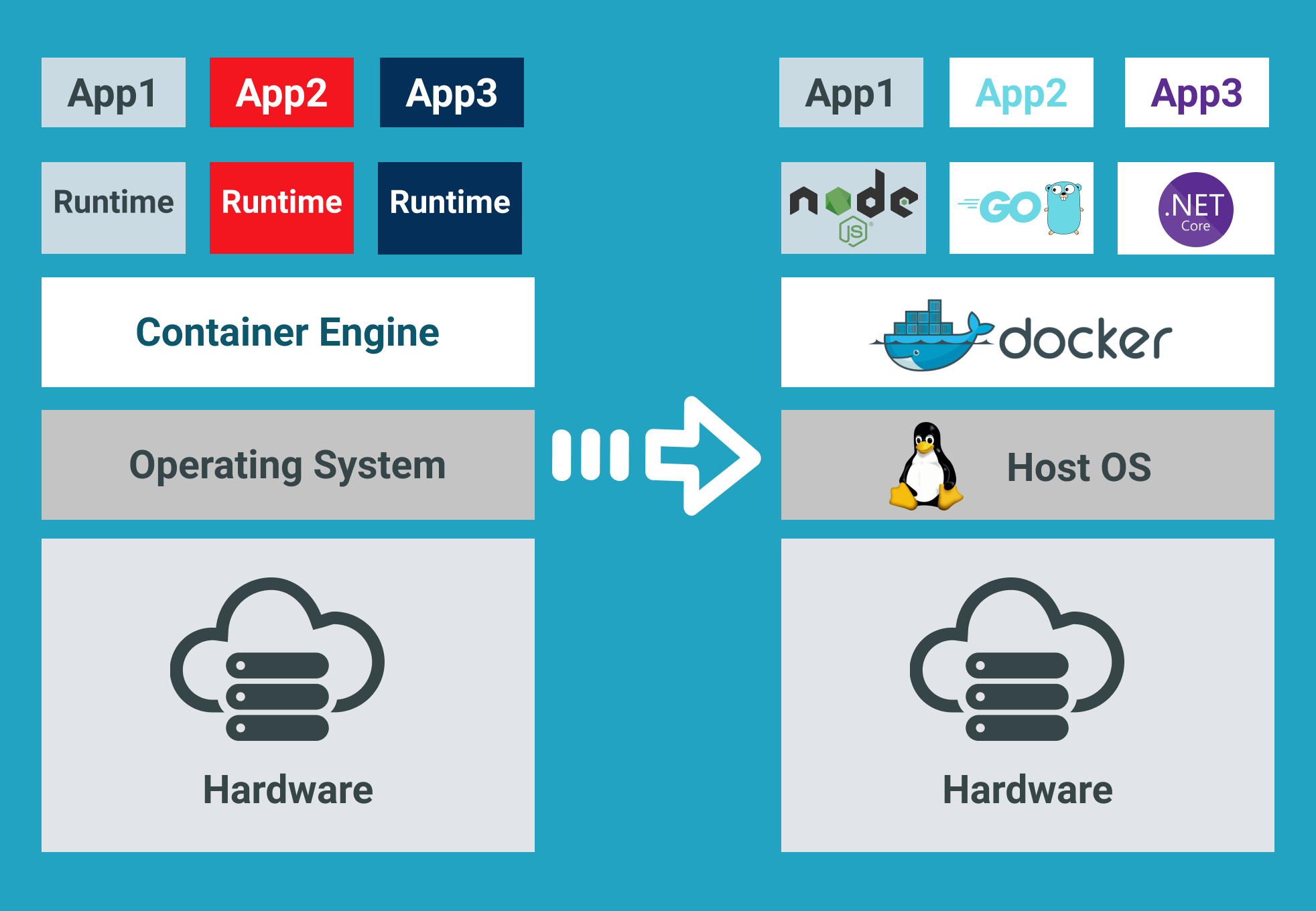

Docker is an open-source containerization platform that simplifies building, deploying, and managing containers. It makes it easy for developers to package their applications and run them in containers, which are lightweight, isolated environments that carry the dependencies an application needs. Because Docker separates applications from the underlying infrastructure, software can be delivered faster. By supporting rapid code delivery, testing, and deployment, Docker shortens the time between writing code and running it in production.

How do Docker containers work?

Docker containers are lightweight, portable environments that let developers package an application together with all of its dependencies and run it as a single unit. Each container runs as an isolated process, so multiple applications can be run and managed side by side on a single host machine. This consolidates administrative work.

Docker’s Numerous Advantages for Organizations

Using Docker offers the following advantages, among others:

- Docker containers make it easier for developers to create isolated, predictable, and consistent environments. Productivity improves because less time is spent fixing errors, which frees up resources for delivering new features to users. Because Docker manages all configurations and dependencies internally, the staging and production environments stay identical. Containers can also be scaled down in periods of low demand, reducing resource consumption and cost, and scaled back up when demand rises.

- Docker can deploy images in seconds, which improves productivity and helps keep costs down. A single image is all that is needed to create a Docker container. Traditionally, provisioning infrastructure, which involves allocating servers and other resources, has been a slow and laborious process. By contrast, once a process is packaged in its own container, it can be shared with other applications, which speeds up deployment immediately.

- Docker is also portable. Once you have tested a containerized application, you can deploy it to any system where Docker is running, and you can expect it to behave there as it did in testing.

- Scalability: Docker lets you create containers to match the requirements of a given application. Because containers are lightweight and portable, applications can easily be scaled up or torn down as the organization’s needs change, which makes workloads simpler to manage. Running multiple containers also gives you a wide range of container management options.

Integrating Kubernetes and Docker

When Kubernetes is used together with Docker, it acts as an orchestrator for Docker containers: it manages and automates their deployment, scaling, and operation.

Kubernetes can create and manage Docker containers, schedule them onto suitable nodes in a cluster, and adjust the number of containers dynamically as demand changes. It can also manage the networking and storage of Docker containers, which makes complex containerized applications easier to build and deploy.
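Placing a container on a suitable node can be pictured as a filter followed by a ranking step. The Python sketch below illustrates that idea only; it is not how the Kubernetes scheduler is implemented. The `Node` and `Request` classes, the `pick_node` function, and the "most free CPU wins" ranking rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu: float      # CPU cores still unallocated
    free_mem_gb: float   # memory still unallocated
    has_gpu: bool = False


@dataclass
class Request:
    cpu: float
    mem_gb: float
    needs_gpu: bool = False


def pick_node(nodes: list[Node], req: Request) -> Optional[str]:
    """Filter out nodes that cannot fit the request, then prefer the emptiest."""
    fits = [
        n for n in nodes
        if n.free_cpu >= req.cpu
        and n.free_mem_gb >= req.mem_gb
        and (n.has_gpu or not req.needs_gpu)
    ]
    if not fits:
        return None  # nothing suitable; the container would have to wait
    return max(fits, key=lambda n: n.free_cpu).name


if __name__ == "__main__":
    nodes = [
        Node("node-a", free_cpu=2, free_mem_gb=4),
        Node("node-b", free_cpu=8, free_mem_gb=16),
        Node("node-c", free_cpu=4, free_mem_gb=8, has_gpu=True),
    ]
    print(pick_node(nodes, Request(cpu=1, mem_gb=1)))
    print(pick_node(nodes, Request(cpu=1, mem_gb=1, needs_gpu=True)))
```

A request that needs a GPU can only land on a node that has one, regardless of how much spare CPU the other nodes offer.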

Combining the two platforms lets you take advantage of both. Docker makes it easy to build, package, and distribute containerized applications, while Kubernetes provides a resilient platform for managing and scaling them. Together they offer a complete solution for running containerized applications at scale.

Benefits of integrating Kubernetes and Docker

Combining Kubernetes and Docker brings several advantages, including the following:

- Improved container orchestration: Kubernetes offers more advanced orchestration features than Docker Swarm, including automatic scaling and self-healing.

- Increased scalability: Kubernetes can scale containerized applications automatically in response to demand.

- Better resource utilization: Kubernetes allocates resources to containerized applications intelligently, which improves performance and reduces waste.
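Demand-based scaling of the kind listed above can be reduced to one proportional rule: multiply the current replica count by the ratio of the observed metric to its target and round up. The Kubernetes Horizontal Pod Autoscaler documents this rule as the core of its algorithm. The Python sketch below applies it with replica bounds; the function name `desired_replicas` and the default bounds are assumptions for the example, and the tolerance and stabilization behavior of the real autoscaler are left out.

```python
import math


def desired_replicas(current: int, metric: float, target: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to metric / target, within bounds."""
    if target <= 0:
        raise ValueError("target must be positive")
    wanted = math.ceil(current * metric / target)
    # Clamp to the configured limits so scaling never runs away.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(current=4, metric=90, target=60))
    # The same four replicas averaging 30% CPU against a 60% target.
    print(desired_replicas(current=4, metric=30, target=60))
```

The upper and lower bounds correspond to the "rules and limits" that a configuration places on automatic scaling.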

Real-world use cases

The following organizations have successfully adopted Kubernetes and Docker:

- Airbnb uses Kubernetes and Docker to manage its microservices architecture, which comprises more than two thousand services.

- Buffer uses Kubernetes as its platform for deploying and managing containerized applications.

- Box uses Kubernetes to manage and scale its containerized services.

Kubernetes and Docker have much in common: both promote high availability, portability, and the decomposition of applications into smaller components. Docker can be used on its own, but combining it with Kubernetes can improve the availability, scalability, and efficiency of containerized applications. By using the two technologies together, these organizations can monitor and manage their containerized applications at very large scale.

Which is better, Kubernetes or Docker?

Given all this, and given that Kubernetes and Docker Swarm are both container orchestration platforms, which one should you choose?

Docker Swarm generally requires less setup and configuration than Kubernetes when you build and run your own infrastructure. It offers many of the same benefits as Kubernetes, such as deploying applications through declarative YAML files, scaling services to your declared state, load balancing across containers in the cluster, and enforcing security and access control across services. Docker Swarm may be a good choice if you run only a few workloads, do not mind managing your own infrastructure, or do not need a specific feature that Kubernetes offers.

Kubernetes is more complex to set up initially but offers greater flexibility and a broader feature set. It is also backed by a large, active open-source community. Out of the box, Kubernetes supports multiple deployment strategies, can manage network ingress, and provides observability into your containers. The major cloud providers offer managed Kubernetes services that make it much easier to get started and to use cloud-native features such as auto-scaling. If your organization runs many workloads, needs cloud-native interoperability, and has multiple teams that require stronger isolation between services, Kubernetes is probably the platform to consider.

Compass and container orchestration

Whichever container orchestration solution you choose, you will need a tool to manage the complexity of your distributed architecture as it scales. Atlassian Compass is an extensible developer experience platform that brings scattered information about engineering output and team collaboration together in one central, searchable place. Its Component Catalog helps you keep microservice sprawl under control, and it can surface data from across your DevOps toolchain through extensions built on Atlassian Forge. Scorecards help you evaluate the health of your software and establish best practices.

Conclusion

Docker and Kubernetes are both prominent containerization technologies, each with its own strengths. Docker focuses on building and packaging containers, while Kubernetes covers a broader range of capabilities, including orchestrating and managing containers at scale. Understanding the differences and similarities between the two tools is essential for finding your way around the container ecosystem. This article has aimed to explain Docker and Kubernetes by examining their individual strengths and the advantages of using them together. As the field evolves, a solid understanding of these technologies will help you make informed decisions that fit your needs and goals.

FAQs: Kubernetes vs Docker

1. What is the difference between Kubernetes and Docker?

Docker is a containerization platform focused on building and packaging containers. Kubernetes is an orchestration tool focused on deploying and scaling containerized applications, including those built with Docker.

2. How do Docker and Kubernetes work together?

Kubernetes and Docker are commonly used together, with Docker handling containerization and Kubernetes handling the orchestration and scaling of containers across a cluster.

3. What are the advantages offered by Docker?

By providing a consistent environment for building, packaging, and distributing containers, Docker lets applications run reliably across different environments.

4. How does Kubernetes differentiate itself?

Kubernetes excels at container orchestration, automating the deployment, scaling, and management of containerized applications. It provides efficient resource utilization, high availability, and fault tolerance within a cluster.

5. How should I decide between Kubernetes and Docker for my project?

The choice between Kubernetes and Docker depends on the requirements of your project. If your main need is building and distributing containers, Docker may be enough. For deploying large, complex distributed applications, Kubernetes is often the better option.

6. Can Docker be used without Kubernetes, and vice versa?

Yes, Kubernetes and Docker can each be used on their own. For smaller projects, Docker can handle containerization without Kubernetes. Conversely, Kubernetes can manage containers built with containerization tools other than Docker.
